Hi all! Today I will be writing about a piece of research I completed as part of my PhD. This work belongs to a popular sub-field of machine learning called adversarial machine learning. It's no surprise that machine learning and deep learning models can accomplish a wide range of complex real-world tasks, from image recognition and time series prediction to playing massively multiplayer games. However, in recent years researchers have shown that machine/deep learning models can be fooled.

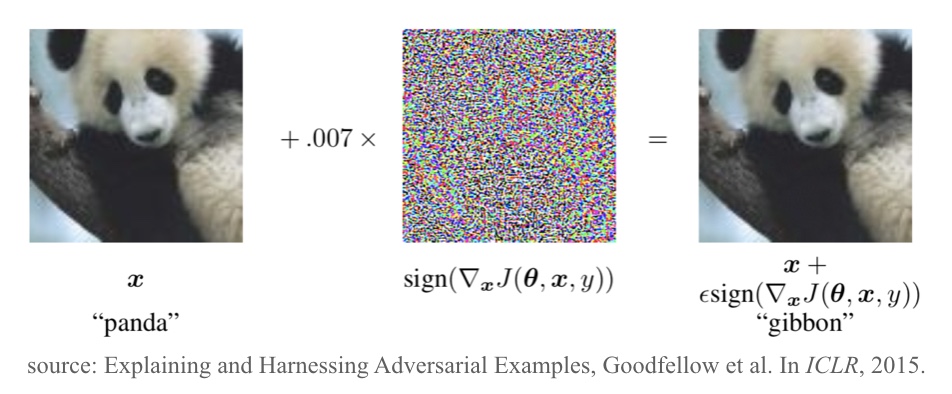

The image above is a prime example of this. In the seminal work by Goodfellow et al., the authors show that a deep learning model trained for image recognition can be fooled by making minimal changes to the original image. In this example, the model classifies the original image (left) as a panda but classifies the modified image (right) as a gibbon, even though the two images are indistinguishable to the naked eye. Since then, many researchers have identified ways to exploit weaknesses in Artificial Intelligence (AI).
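To make "minimal changes" concrete: Goodfellow et al. produced the panda/gibbon example with the fast gradient sign method (FGSM), which moves every input feature by a small amount \(\epsilon\) in the direction that increases the model's loss, \(x' = x + \epsilon \cdot \mathrm{sign}(\nabla_x J(\theta, x, y))\). The sketch below applies FGSM to a toy logistic-regression classifier instead of a deep network, so that the gradient can be written by hand. The weights, input and \(\epsilon\) are made-up values for illustration only.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fgsm_logistic(x, y, w, b, eps):
    """Fast gradient sign method against a logistic-regression classifier.

    x: input vector, y: true label in {0, 1}, (w, b): model parameters,
    eps: maximum change allowed per feature (L-infinity budget).
    """
    p = sigmoid(w @ x + b)            # predicted probability of class 1
    grad_x = (p - y) * w              # gradient of the cross-entropy loss w.r.t. x
    return x + eps * np.sign(grad_x)  # move each feature by +/- eps to increase the loss

# Toy model and input (hypothetical values chosen for illustration).
w = np.array([2.0, -3.0, 1.0])
b = 0.0
x = np.array([0.5, 0.1, 0.2])   # w @ x = 0.9, so the model predicts class 1
y = 1
x_adv = fgsm_logistic(x, y, w, b, eps=0.25)

print(bool(sigmoid(w @ x + b) > 0.5), bool(sigmoid(w @ x_adv + b) > 0.5))  # prints: True False
```

With these values no feature changes by more than 0.25, yet the prediction flips from class 1 to class 0. Deep networks are attacked the same way, except that the gradient is obtained by backpropagation.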

This growing concern is especially important for AI used in the cybersecurity domain, because if models can be fooled, an attacker could engineer methods to evade automated threat detection, thereby wreaking havoc without fear of being caught.

So today I will be talking about our own contribution to adversarial AI, applied to anomaly detection from distributed system logs. In this work, we put on the hat of an attacker: our goal was to find an automated method that can evade detection by even state-of-the-art system log anomaly detection models such as DeepLog. I won't describe the minute details of our attack method; instead, I will provide an overview of its design. For algorithmic details, please refer to our paper and the code.

1. Anomaly Detection from Distributed System Logs

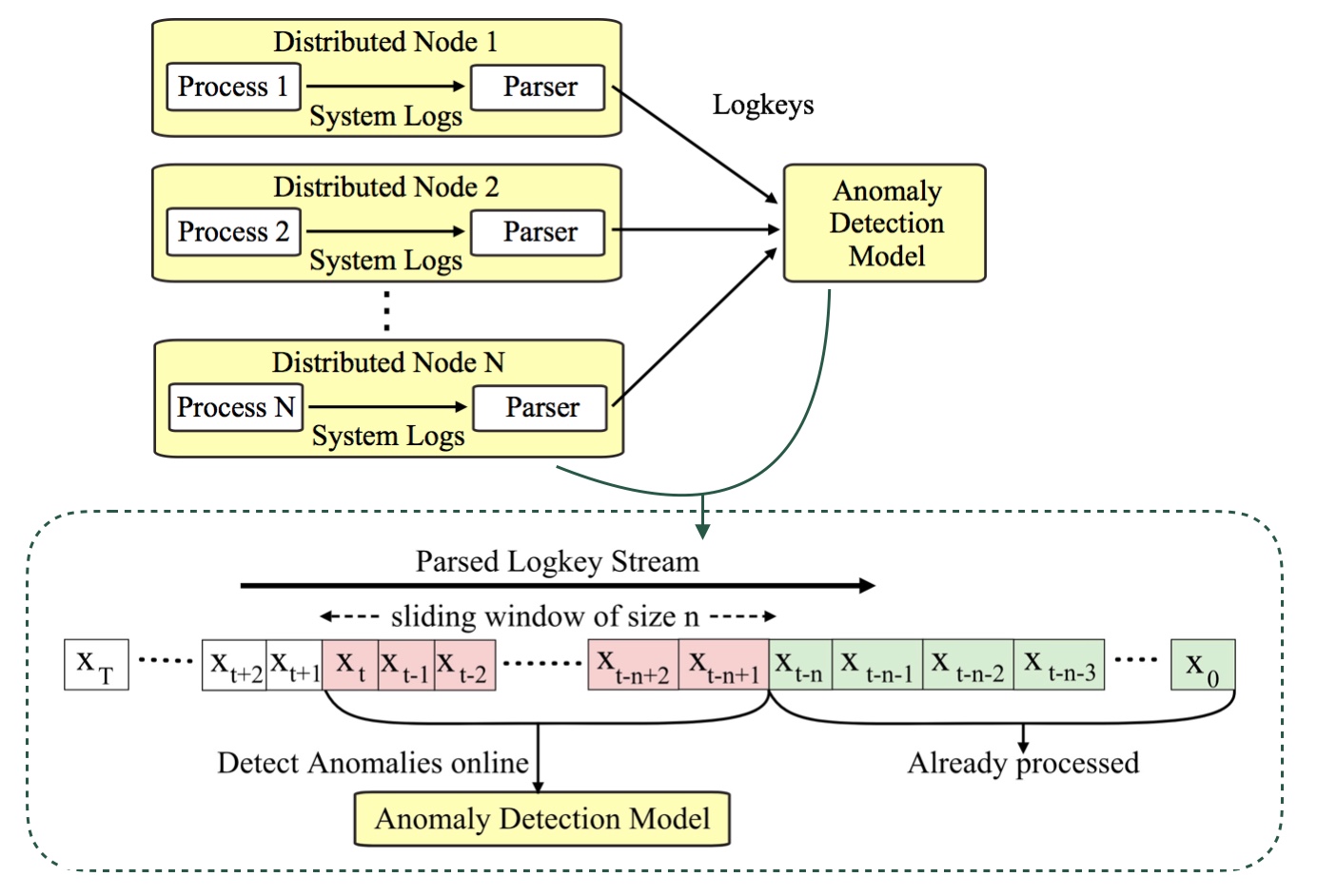

Anomaly detection using distributed system logs usually occurs in two steps: 1) a parsing step and 2) a detection step. First, the logs generated by the distributed system are collected and converted into numeric values called logkeys. Unlike in typical Natural Language Processing (NLP) tasks, logs generated by systems follow predefined templates, so it is possible to assign a numeric identifier to each log type.
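A minimal sketch of the parsing step, assuming the templates are already known. The three HDFS-style templates below are hypothetical examples written as regular expressions, and lines that match no template get the placeholder key -1. In practice the templates are usually mined automatically by a log parser such as Drain rather than written by hand.

```python
import re

# Hypothetical log templates: each regular expression stands for one log type,
# with variable fields (block IDs, sizes, addresses) matched by wildcards.
TEMPLATES = [
    re.compile(r"Receiving block \S+ src: \S+ dest: \S+"),
    re.compile(r"Received block \S+ of size \d+ from \S+"),
    re.compile(r"Deleting block \S+ file \S+"),
]

def to_logkey(line):
    """Return the index of the first template that matches the line, or -1 if none does."""
    for key, pattern in enumerate(TEMPLATES):
        if pattern.search(line):
            return key
    return -1  # unseen log type

def parse(lines):
    """Convert a stream of raw log lines into a sequence of numeric logkeys."""
    return [to_logkey(line) for line in lines]

logs = [
    "Receiving block blk_1 src: /10.0.0.1:5000 dest: /10.0.0.2:50010",
    "Received block blk_1 of size 67108864 from /10.0.0.1",
    "Deleting block blk_1 file /data/blk_1",
    "Unexpected error on node-7",
]
print(parse(logs))  # [0, 1, 2, -1]
```

The variable parts of each message (block IDs, sizes, addresses) are absorbed by the wildcards, which is what lets every occurrence of a log type collapse onto the same logkey.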

Therefore, a stream of logs can be converted into a numeric time series. In the above image it is denoted by \(X = \{ x_{T}, \ldots, x_{t}, x_{t-1}, x_{t-2}, \ldots, x_{0}\}\) for a time series running from time \(0\) to time \(T\), where each \(x_{t}\) corresponds to the log event that occurred at time \(t\). The logkeys are then sent to an anomaly detection model, which processes the logkey stream and detects anomalies in a sliding-window fashion, using a window of \(n\) logkeys with a step size of one logkey per time step.
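The detection step can be sketched as follows. The sliding windows follow the description above (a window of \(n\) logkeys, step size 1). For the decision rule we borrow DeepLog's: DeepLog uses an LSTM to rank the likely next logkeys given the current window, and flags the actual next key as anomalous if it is not among the top \(g\) candidates. To keep the example self-contained, a simple frequency table learned from normal logkeys stands in for the LSTM; `TopGDetector` and its parameters are hypothetical names.

```python
from collections import Counter, defaultdict

def sliding_windows(keys, n):
    """Yield (window, next_key) pairs: n consecutive logkeys and the key that follows, step size 1."""
    for i in range(len(keys) - n):
        yield tuple(keys[i:i + n]), keys[i + n]

class TopGDetector:
    """Stand-in for a DeepLog-style detector. DeepLog ranks candidate next keys with an LSTM;
    here they are ranked by how often they followed the same window in normal training data."""

    def __init__(self, n, g):
        self.n, self.g = n, g
        self.counts = defaultdict(Counter)

    def fit(self, normal_keys):
        for window, nxt in sliding_windows(normal_keys, self.n):
            self.counts[window][nxt] += 1
        return self

    def is_anomalous(self, window, nxt):
        ranked = self.counts.get(tuple(window), Counter())  # unseen window: no candidates at all
        top_g = [k for k, _ in ranked.most_common(self.g)]
        return nxt not in top_g

    def detect(self, keys):
        """Return the positions of logkeys that fall outside the top-g predictions."""
        return [i + self.n for i, (w, nxt) in enumerate(sliding_windows(keys, self.n))
                if self.is_anomalous(w, nxt)]

normal = [0, 1, 2, 3] * 5
det = TopGDetector(n=3, g=1).fit(normal)
print(det.detect(normal))                    # []
print(det.detect([0, 1, 2, 3, 0, 1, 4, 3]))  # [6, 7]
```

The unexpected key 4 at position 6 is flagged, and so is the key after it, because its window (0, 1, 4) never occurred in normal data. A single injected event can therefore disturb several consecutive windows.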

2. Our Attack: Log-Anomaly-Mask (LAM)

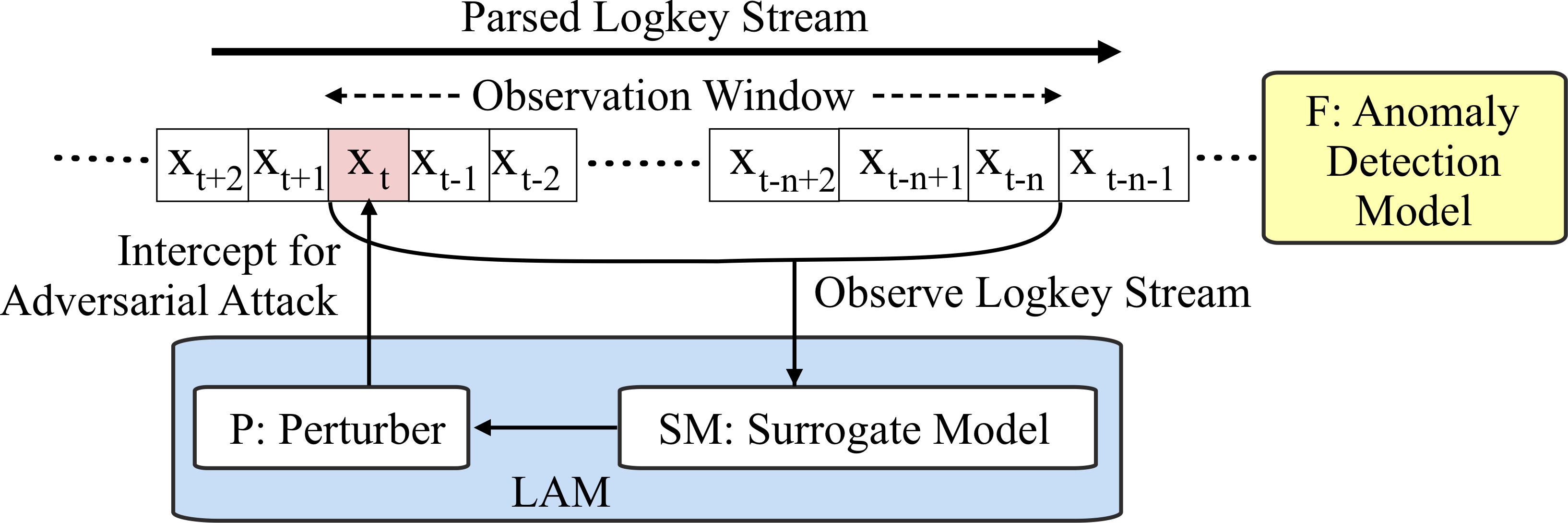

Here, I will go through our proposed attack, Log Anomaly Mask (LAM), and an example of how it works. LAM operates by intercepting the logkeys streamed towards the anomaly detection model, and it works in two stages. In the first stage, it observes a fixed-length sequence of logkeys, denoted by the observation window in the image above, and uses a surrogate anomaly detection model to identify whether there are any potential anomalies in that window. In the second stage, if any potential anomalies were identified, LAM uses a component called the perturber to intercept and change the most recent observed logkey (\(x_{t}\) at time \(t\)).
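The two stages can be outlined as a generator that sits between the log stream and the operational detector. This is only an outline under simplifying assumptions: `lam_intercept`, `surrogate_flags` and `perturber` are hypothetical placeholders for LAM's interception logic, surrogate model and learned perturber; the surrogate is consulted only once the observation window is full; and the window records the value that was actually forwarded.

```python
from collections import deque

def lam_intercept(stream, window_size, surrogate_flags, perturber):
    """Outline of LAM's two stages on a logkey stream (all callables are hypothetical stand-ins).

    surrogate_flags(window) -> bool: the surrogate detector thinks the window looks anomalous.
    perturber(window) -> int: replacement for the most recent logkey x_t.
    Yields the (possibly modified) logkeys forwarded to the operational detector.
    """
    window = deque(maxlen=window_size)       # observation window
    for x_t in stream:
        window.append(x_t)                   # stage 1: observe the latest logkey
        if len(window) == window_size and surrogate_flags(tuple(window)):
            x_t = perturber(tuple(window))   # stage 2: change x_t before it is forwarded
            window[-1] = x_t                 # assumption: the window tracks what was actually sent
        yield x_t

# Toy usage: the surrogate flags any window containing logkey 4,
# and the perturber always substitutes logkey 0 (both hypothetical).
print(list(lam_intercept([1, 2, 3, 4, 5], 3, lambda w: 4 in w, lambda w: 0)))  # [1, 2, 3, 0, 5]
```

Writing the attack as a generator reflects the online setting: each logkey is decided and forwarded as soon as it arrives, without waiting for the rest of the stream.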

We use deep reinforcement learning to design the perturber for two reasons. First, anomaly detection models can process logs and detect anomalies online, so for this attack to work, LAM must be able to intercept and perturb logkeys in real time. Second, there may be many possible modifications to \(x_{t}\), but not all of them fool the anomaly detection model, so LAM must directly identify a modification that does, without the luxury of trying many combinations.

A reinforcement learning agent can be trained to learn an optimal policy for choosing the perturbation to \(x_{t}\) that is most likely to make anomaly detection fail later on. In addition, in this attack setting LAM must make a split-second decision without knowing how the logs it has not yet seen will vary. Reinforcement learning agents are designed for such partially observable environments, much like choosing a move in a game without knowing what the opponent will do next. Similarly, we design our agent to fool an anomaly detection model even though it does not know the variation in future logs. Please refer to our paper for an in-depth description of how our attack works.
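To illustrate how a perturbation policy can be learned from rewards, here is a heavily simplified sketch that uses tabular Q-learning in place of the deep reinforcement learning used in LAM. Everything about this setup is an assumption for illustration, not the design from our paper: the state is the last \(n-1\) forwarded logkeys plus the incoming \(x_t\); an action either forwards \(x_t\) unchanged (`None`) or replaces it with a candidate logkey; the surrogate detector accepts a window only if it occurred in normal logs; and the reward is +1 or -1 depending on that verdict, minus a small cost for modifying \(x_t\). Because the discount factor carries future rewards back to the current decision, the agent is pushed toward perturbations that also keep later windows looking normal.

```python
import random
from collections import defaultdict

def train_perturber(normal, attack_streams, n, actions, episodes=300,
                    alpha=0.5, gamma=0.9, eps=0.2, seed=0):
    """Tabular Q-learning sketch of a perturber policy (a simplified stand-in for deep RL).

    Assumes n >= 2. Returns a greedy policy: state -> replacement logkey, or None to keep x_t.
    """
    rng = random.Random(seed)
    # Hypothetical surrogate detector: a window is normal iff it appears in the normal logs.
    seen = {tuple(normal[i:i + n]) for i in range(len(normal) - n + 1)}
    choices = [None] + list(actions)
    Q = defaultdict(float)

    def greedy(state):
        return max(choices, key=lambda a: Q[(state, a)])

    for _ in range(episodes):
        stream = rng.choice(attack_streams)
        context = tuple(stream[:n - 1])            # assume the first n-1 keys pass unchanged
        for t in range(n - 1, len(stream)):
            state = (context, stream[t])
            a = rng.choice(choices) if rng.random() < eps else greedy(state)  # epsilon-greedy
            sent = stream[t] if a is None else a
            reward = (1.0 if context + (sent,) in seen else -1.0) - (0.1 if a is not None else 0.0)
            context = context[1:] + (sent,)        # the forwarded key becomes part of the next state
            if t + 1 < len(stream):
                target = reward + gamma * max(Q[((context, stream[t + 1]), b)] for b in choices)
            else:
                target = reward                    # end of the episode
            Q[(state, a)] += alpha * (target - Q[(state, a)])
    return greedy

normal = [0, 1, 2, 3] * 5
attack = [0, 1, 2, 3, 0, 1, 9, 3, 0, 1]  # 9: hypothetical logkey emitted by an injected task
policy = train_perturber(normal, [attack], n=3, actions=[0, 1, 2, 3])
print(policy(((0, 1), 9)))  # 2
```

The learned policy replaces the injected key 9 with 2, which restores the normal pattern 0, 1, 2, 3 both for the current window and for the windows that follow it. A deep RL agent replaces the lookup table `Q` with a neural network so that it can generalise to states it has never visited.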

An Attack Example:

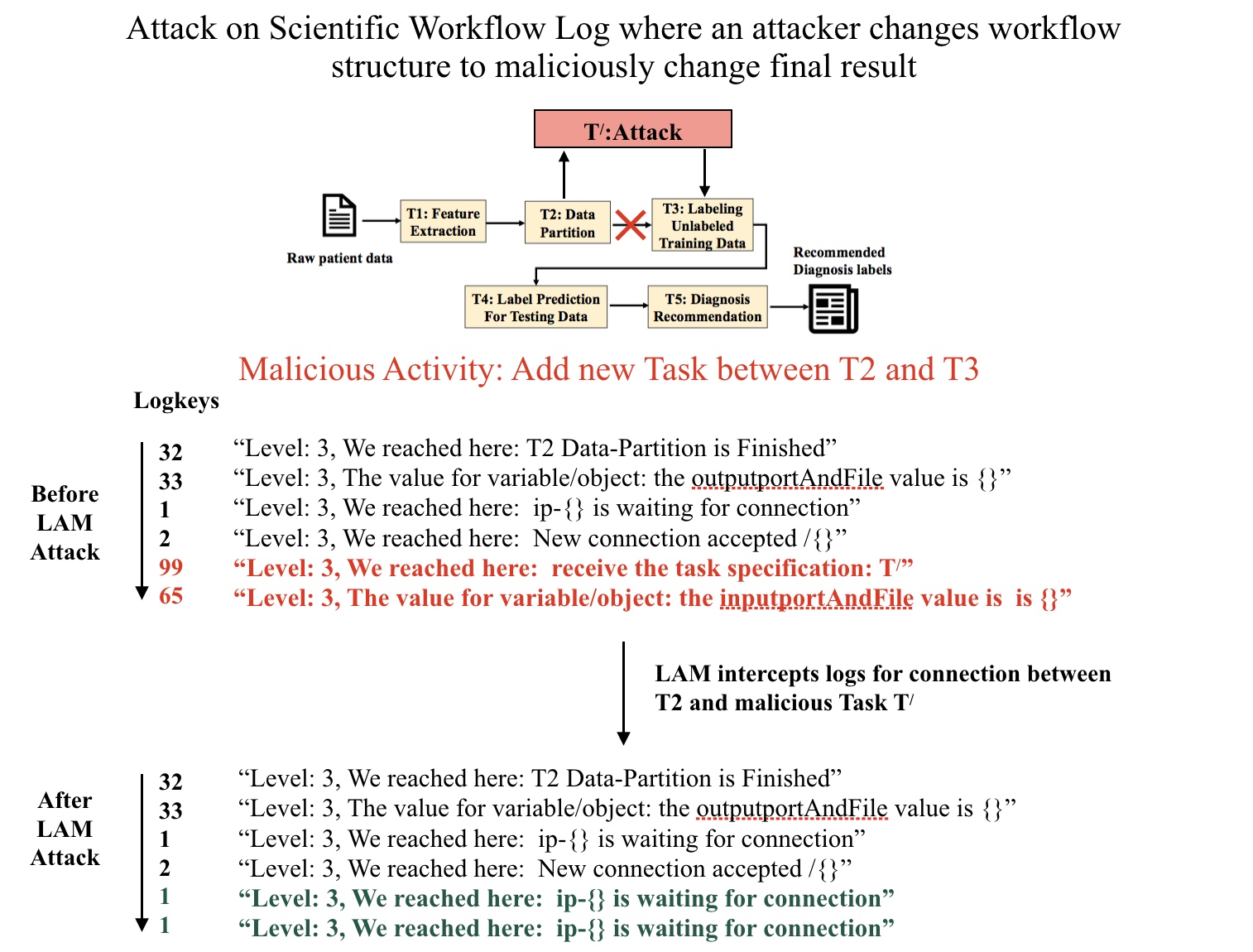

The example above shows an attack on a distributed workflow designed to diagnose a patient's illness. Five automated tasks are carried out for this diagnosis (i.e., T1-Feature-Extraction, …, T5-Diagnosis-Recommendation). Let's say an attacker modifies this workflow by adding a task that intercepts the result from T2 and modifies it before it arrives at T3. In the original logs, before LAM is applied, you can see that the structure of the workflow has changed because of this attack (highlighted in red), and when these logs are processed by an anomaly detection model, the attack can be detected. However, after LAM is applied, the logs pertaining to the attack are modified to values that indicate an idle state of the distributed machines, which in turn fools the operational anomaly detection model. For more in-depth details, please check our paper and the code.

So until next time,

Cheers!
